Recently, I wrote a defense of the uncontroversial-within-cognitive-science-but-widely-disbelieved idea that the mind is a computer.

That post got me thinking about other ideas that are broadly accepted amongst the expert communities that study them, but not among the general population.

I agreed with some of the following ideas before I read much about them; for these, the expert consensus reinforced my prior worldview. But for most, I had to be persuaded. Many of these ideas are genuinely surprising, and it takes a lot of evidence before people change their minds about them.

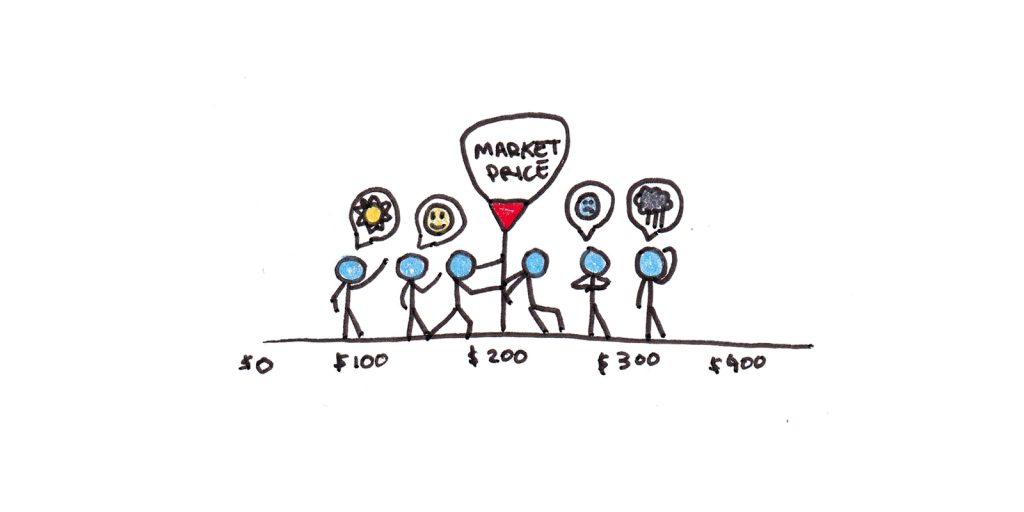

1. Markets are mostly efficient. Most people, most of the time, cannot “beat” the market.

The efficient market hypothesis argues that the price of widely-traded securities, like stocks, reflects an aggregation of all available information about them. This means investors can’t spot “deals” or “overpriced” assets and use that knowledge to outperform the average market return (without taking on more risk).

The mechanism underlying this is simple: Suppose you did have information that an asset was mispriced. You’d be incentivized to buy or short the asset, expecting a better return than the current market would dictate. But this action causes the asset’s price to adjust in the opposite direction, moving it closer to the “correct” value. Taken as a whole, the very action of investors trying to beat the market is what makes it so difficult to beat.
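To make that feedback loop concrete, here is a minimal toy sketch. It assumes a single asset with a known "fair value" and informed traders whose buying or shorting closes a fixed fraction of the remaining gap each round. The function name, the `impact` parameter and the numbers are all hypothetical illustrations, not a model of any real market.

```python
def simulate_price_adjustment(price, fair_value, impact=0.5, rounds=10):
    """Toy model: each round, informed traders trade toward fair value,
    moving the price by a fixed fraction (`impact`) of the remaining gap."""
    path = [price]
    for _ in range(rounds):
        gap = fair_value - price   # positive: underpriced, negative: overpriced
        price += impact * gap      # buying pushes the price up, shorting pushes it down
        path.append(price)
    return path


# Hypothetical numbers: an asset trading at 80 that is "really" worth 100.
path = simulate_price_adjustment(price=80.0, fair_value=100.0)
mispricing = [abs(100.0 - p) for p in path]
```

In this sketch the mispricing shrinks every round, so whoever arrives late finds little left to exploit. Real markets are far messier, but the direction of the effect is the same.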

Asset bubbles and stock market crashes are not good evidence against this view. (Of all the people who “predicted” a bubble/crash, how many made money from their prediction?) Nor is that friend you know who seemingly made fantastic returns from crypto/real estate/penny stocks/etc. (That’s usually explained by them taking on more risk, and thus having a higher risk premium, and getting lucky.)

The obvious consequence is that for most retail investors, it’s best to put their money in broad-based index funds to get the benefits of diversification and earn the average market return with few fees.

2. Intelligence is real, important, largely heritable, and not particularly changeable.

This is one that I fought accepting for years. It goes against my beliefs in the value of self-cultivation, practice and learning. However, the evidence is overwhelming:

- Intelligence is one of the most scientifically valid psychometric measurements (far more so than personality, including the dubious Myers-Briggs).

- It is positively correlated with many other things we want in life (including happiness, longevity and income!).

- It shows strong heritability, with the g-factor maybe being as much as 85% heritable.

- Finally, few interventions reliably improve IQ, with a possible exception for more education (although it’s not clear this improves g).

I don’t like trotting this argument out. I find it much more appealing to believe in a world where IQ tests don’t measure anything, or they don’t measure anything important, or that any differences are due to education and environment, or that you could improve your intelligence through hard work.

That said, I do think there’s a silver lining here. While your general intelligence may not be easy to change, there’s ample evidence that gaining knowledge and skills improves your ability to do all sorts of tasks. Practically speaking, the best way to become smarter is to learn a lot of stuff and cultivate a lot of skills. Since knowledge and skills are more specific than general intelligence, that may be less than we desire, but it still matters a lot.

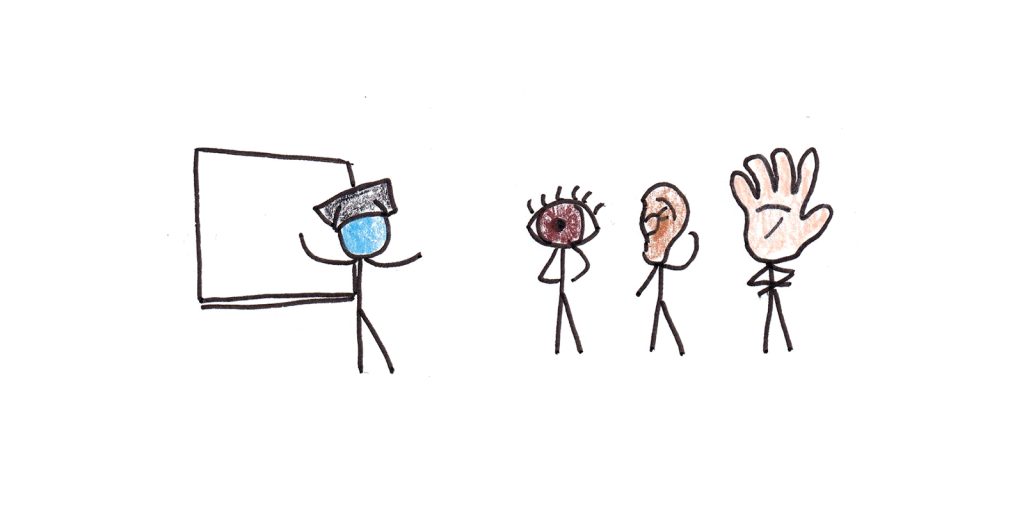

3. Learning styles aren’t real. There’s no such thing as being a “kinesthetic,” “visual,” or “auditory” learner.

I’ve had multiple interviews for my book, Ultralearning, where the host asks me to talk about learning styles. And I always have to, ever so gently, explain that they don’t exist.

The theory of learning styles is an eminently testable hypothesis:

- Give people a survey or test to see what their learning style is.

- Teach the same subject in different ways (diagrams, verbal description, physical model) that either correspond with or go against a person’s style.

- See whether they perform better when teaching matches their learning style and worse when it doesn’t.

Careful experiments don’t find the performance enhancements one would expect based on the theory. Ergo, learning styles isn’t a good theory.
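The test described above boils down to one comparison: do learners score higher when the teaching method matches their reported style than when it doesn't? A rough sketch of that comparison follows. The function name and record layout are my own assumptions, and the scores are made up purely for illustration; they are not real experimental data.

```python
from statistics import mean


def matching_advantage(records):
    """Mean score when teaching matches the learner's reported style,
    minus the mean score when it does not.

    Each record is a (reported_style, teaching_method, score) tuple.
    """
    matched = [score for style, method, score in records if style == method]
    mismatched = [score for style, method, score in records if style != method]
    return mean(matched) - mean(mismatched)


# Made-up scores, for illustration only (not real data).
records = [
    ("visual", "visual", 70),
    ("visual", "auditory", 71),
    ("auditory", "auditory", 68),
    ("auditory", "visual", 69),
]
advantage = matching_advantage(records)
```

If learning styles worked as advertised, `advantage` would be reliably positive across studies. A proper analysis would also test whether any difference is statistically significant, which this sketch leaves out.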

I think this idea survives for two reasons. First, people love the psychological equivalent of horoscopes, which may be why Myers-Briggs is so popular despite a lack of scientific support for it as a theory. Second, it is a truism that some people are simply better at visualizing, better at listening or more dexterous, and that truism seems quite similar to learning styles. But just because you’re better at basketball than math doesn’t mean you’ll learn calculus better if the teacher tries to explain it in terms of three-pointers.

4. The world around us is explained entirely by physics.

Sean Carroll articulates a basic physicist worldview that quantum mechanics and the physics described by the Standard Model essentially explain everything around us.

The remaining controversies of physics, from string theory to supersymmetry, are primarily theoretical issues only relevant at extremely high energies. For everyday life at room temperature, the physics we already know does remarkably well at making predictions.

True, knowing the Standard Model doesn’t help us predict most of the big things we care about—even calculating the effects of interactions between a few particles can be intractable. But, in principle, everything from democratic governance to the beauty of sunsets is contained in those equations.

5. People are overweight because they eat too much. It is also really hard to stop.

The calorie-in, calorie-out model is, thermodynamically speaking, correct. People who are overweight would lose weight if they ate less.

Yet, this is really difficult to do. As I discuss with neuroscientist and obesity researcher Stephan Guyenet, your brain has specific neural circuitry designed to avoid starvation and, by extension, any rapid weight-loss. When you lose a lot of body fat, your hunger levels increase to encourage you to bring it back up.

This is hardly a radical view, but it’s strongly opposed by a particularly noisy segment of online opinion that either tries to explain weight in terms of something other than calories or, conceding that, seems to assert that the problem is simply a matter of applying a little effort.

One reason I’m optimistic about the new class of weight-loss drugs is simply that, as a society, we’re probably heavier than is optimally healthy, and most interventions based on willpower don’t work.

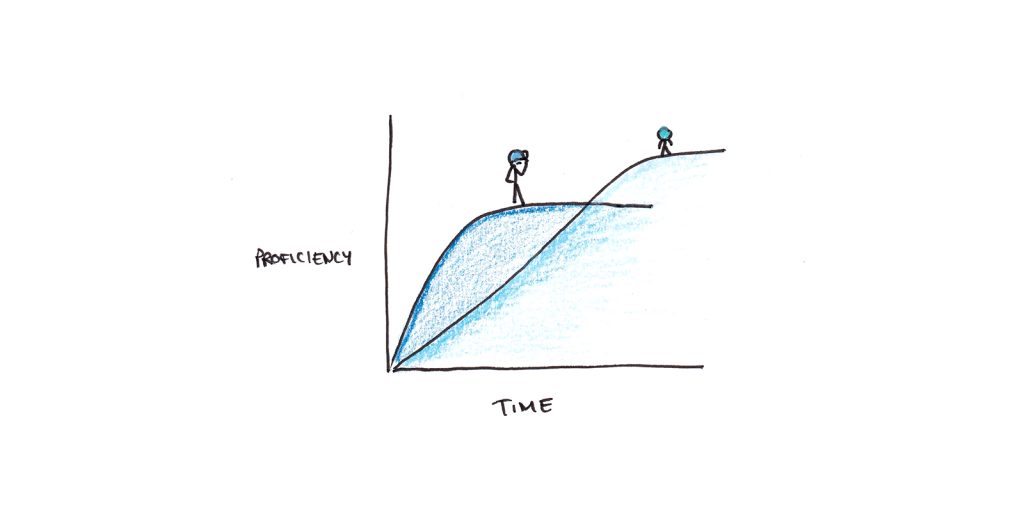

6. Children don’t learn languages faster than adults, but they do reach higher levels of mastery.

Common wisdom says if you’re going to learn a language, learn it early. Children regularly become fluent in their home and classroom language, indistinguishable from native speakers; adults rarely do.

But even if children eventually surpass the attainments of adults in pronunciation and syntax, it’s not true that children learn faster. Studies routinely find that, given the same form of instruction/immersion, older learners tend to become proficient in a language more quickly than children do. Adults simply tend to plateau at a non-native level of ability, even with continued practice.

I take this as evidence that language learning proceeds through both a fast, explicit channel and a slow, implicit channel. Adults and older children may have a more fully developed fast channel, but perhaps have deficiencies in the slow channel that prevent completely native-like acquisition.

This implies that if you want your child to be completely bilingual, it helps to start early. But that probably requires non-trivial amounts of immersive time in the second language. If they’re only going to a weekly class the way most adults learn, then there may be no special benefit to starting extremely young and maybe even an advantage to starting older.

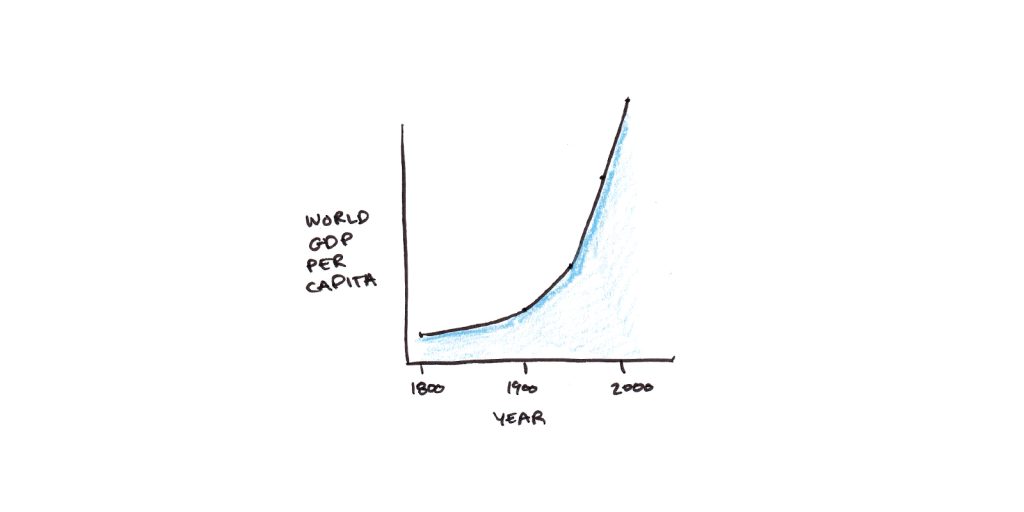

7. We’re better off than our grandparents. We’re vastly better off than our ancestors.

Economic pessimism is fashionable.

Everyone I talk to likes to point out various ways that the current generation (at least in North America, where I reside) has it worse than our parents did: houses and college degrees cost more, and it is harder to support a family on a single earner’s paycheck.

But pretty much every economic indicator is positive. Even the indicators that pessimistic pundits like to complain about mostly show stagnant wages or rising inequality rather than genuine decline.

The world we live in today is wealthier than that of our parents, and fantastically wealthier than it was a century ago. Economic progress is not everything, but it matters a great deal.

This isn’t a call to stop striving and rest on our laurels; many problems in society still require fixing. But a narrative that begins by denying the material progress that has genuinely been made distorts the task ahead of us.

I'm a Wall Street Journal bestselling author, podcast host, computer programmer and an avid reader. Since 2006, I've published weekly essays on this website to help people like you learn and think better. My work has been featured in The New York Times, BBC, TEDx, Pocket, Business Insider and more. I don't promise I have all the answers, just a place to start.
